When deploying ADFS for SharePoint, there are a few considerations. ADFS is not just another authentication method: it will affect quite a few functionalities that we take for granted. Here are some of the common situations that SharePoint customers encounter when they implement ADFS for SharePoint.

Zoning for web applications.

Check out the dedicated blog post for a detailed discussion of this topic. Very likely, you will need to use ADFS in a non-default zone, because search crawl does not work without Windows Authentication.
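As a rough illustration of the zoning idea, the sketch below extends an existing web application into a non-default zone that uses ADFS, while the Default zone keeps Windows Authentication for the crawler. The URLs, the zone choice (Intranet), and the extension name are placeholders rather than values from this post, and the script assumes that exactly one trusted identity token issuer is already registered in the farm.

```powershell
# Sketch only: URLs, zone, and names are placeholders, not values from this post.
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# Assumes a single trusted identity token issuer (ADFS) already exists in the farm.
$issuer = Get-SPTrustedIdentityTokenIssuer
$adfsProvider = New-SPAuthenticationProvider -TrustedIdentityTokenIssuer $issuer

# The Default zone keeps Windows Authentication so that search crawl still works.
# The extended zone (Intranet here) is the one users reach through ADFS.
New-SPWebApplicationExtension -Identity "http://sp-default.contoso.local" `
    -Name "SharePoint - ADFS" `
    -Zone Intranet `
    -URL "https://portal.contoso.com" `
    -HostHeader "portal.contoso.com" `
    -Port 443 `
    -SecureSocketsLayer `
    -AuthenticationProvider $adfsProvider
```

Try this on a test farm first. The extended zone also needs its IIS binding, certificate, and ADFS relying party identifier set up to match the public URL.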

People picker

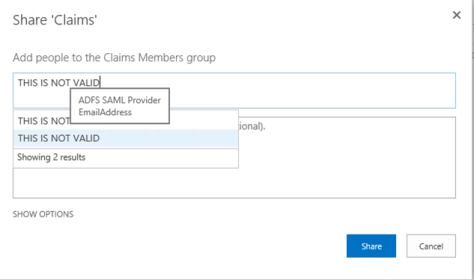

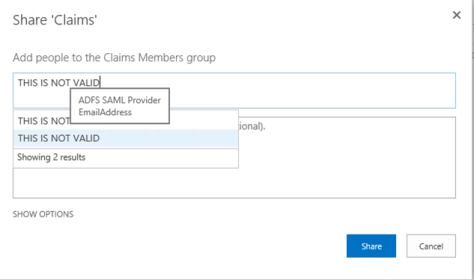

The People Picker does not work properly in the zone that uses ADFS: it accepts every user claim. Anything users type in the People Picker is accepted, even when no such user exists in Active Directory, as the screenshots show.

This is a known issue. As stated on TechNet (http://technet.microsoft.com/en-us/library/gg602078(v=office.15).aspx), “when you use SAML token-based authentication, all queries entered in the text box are automatically displayed as if they were resolved, regardless of whether they are valid users or groups”. Therefore, we need to implement a custom claims provider in SharePoint, so that SharePoint can query AD directly and the People Picker resolves only real users and groups. A sample of such a custom claims provider is available online: http://ldapcp.codeplex.com/.

User Profile Sync

You will need to configure the User Profile Service Application separately for ADFS. Otherwise, users authenticated through ADFS will not have their profiles synced from AD, and their properties will not be populated to the site collections. They will not be able to use functions that depend on user profiles, for example the outgoing email service (simply because there is no email address in the user profile).

After the basic setup of ADFS for SharePoint, a User Profile Synchronization connection and a User Property mapping need to be set up to make sure that ADFS users’ properties are synced. Here are the steps to create a profile synchronization connection to a directory service for ADFS users.

a. On the Add new synchronization connection page, type the synchronization connection name in the Connection Name box: User Profile Sync for ADFS.

b. From the Type list, select the type of directory service to which you want to connect.

c. Fill in the Connection Settings section according to the directory service to which you are creating a connection.

d. In the Forest name box, type the name of the forest.

e. Click Specify a domain controller and type the domain controller name in the Domain controller name box.

f. In the Authentication Provider Type box, select Trusted Claims Provider Authentication.

g. In the Authentication Provider instance box, make sure ADFS is selected.

h. In the Account name box, type the synchronization account domain\sp.profile.

i. In the Password box, type the password for the synchronization account.

j. In the Confirm Password box, type the password for the synchronization account again.

k. In the Port box, enter the connection port 389.

l. In the Containers section, click Populate Containers, and then select the containers from the directory service that you want to synchronize.

m. Click OK.

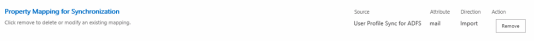

Here are the steps for mapping User Properties for ADFS users.

a) On the Manage Profile Service page, in the People section, click Manage User Properties.

b) Click Claim Provider Identifier and choose Edit.

c) The mapping should have been configured automatically when the synchronization connection was created. Make sure the attribute ADFS is imported for the connection User Profile Sync for ADFS.

d) Click OK.

e) On the Manage User Properties page, click Claim User Identifier.

f) For the source User Profile Sync for ADFS, import email as the Claim User Identifier.

Audience:

For users authenticated through ADFS, audiences do not work even if you have synchronized user profiles to SharePoint. You will need to create the profiles manually or implement custom code. If you create them manually, create the profiles with their proper claims attributes (for example, i:05.t|AD FS with roles|name@contoso.com as the account name) and then populate the other fields with the data that you want to use in your audiences. However, without custom code you cannot use a user-based audience, such as membership in a group. It might be more efficient to use a property-based audience in this case.
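The manual profile creation described above could also be scripted. The following is a minimal sketch, not a tested procedure: the site URL, the email address, and the Department value are hypothetical, and it assumes the account running it has permission to manage profiles in the User Profile Service Application. It builds the encoded account name from the trusted issuer rather than typing it by hand.

```powershell
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue
[void][System.Reflection.Assembly]::LoadWithPartialName("Microsoft.Office.Server.UserProfiles")

# Placeholders: site URL, email address, and department are illustrative only.
$site    = Get-SPSite "https://portal.contoso.com"
$context = Get-SPServiceContext -Site $site
$upm     = New-Object Microsoft.Office.Server.UserProfiles.UserProfileManager($context)

# Build the encoded identity claim for the ADFS user (assumes a single trusted issuer).
$issuer  = Get-SPTrustedIdentityTokenIssuer
$claim   = New-SPClaimsPrincipal -Identity "name@contoso.com" -TrustedIdentityTokenIssuer $issuer
$account = $claim.ToEncodedString()

# Create the profile only if it is missing, then fill the fields the audience rules will use.
if (-not $upm.UserExists($account)) {
    $userProfile = $upm.CreateUserProfile($account)
    $userProfile["WorkEmail"].Value  = "name@contoso.com"
    $userProfile["Department"].Value = "Finance"
    $userProfile.Commit()
}

$site.Dispose()
```

A property-based audience rule (for example, Department equals Finance) can then match these profiles once the audience is compiled.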

This TechNet article mentions this limitation:

http://technet.microsoft.com/en-us/library/dn720355(v=office.15).aspx

Other known issues:

A few other things don’t work. For example, search alerts do not work with SAML security tokens (generated by ADFS). On TechNet, there is an article that covers this topic for SharePoint 2010:

http://technet.microsoft.com/en-us/library/hh706161.aspx

There seems to be no improvement in this area in SharePoint 2013.

There are more issues if you are using an older version of SharePoint or ADFS. The good news is that there are some improvements if you are using SharePoint 2013 and ADFS 3.0. For example, check out this blog post on how to make provider-hosted apps work with SAML claims:

http://www.wictorwilen.se/sharepoint-2013-with-saml-claims-and-provider-hosted-apps

Alerts

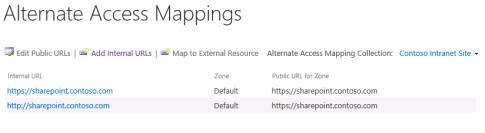

If there are multiple zones and users subscribe to an alert from a non-default zone, the links in the alert emails they receive point to the default zone. This can only be resolved with custom code. For a solution, you can check out this blog post:

https://timpanariuovidiu.wordpress.com/2014/06/23/sharepoint-2013-alerts/

Group claims

You can choose which LDAP attributes are passed to SharePoint as outgoing claims. A single attribute is enough if you only want to authenticate individual users. For example, you could pass only the email address, and all users would be authenticated with their email addresses. However, if you would like to grant permissions to AD security groups for ADFS users, you will need to pass group claims. To do this, first make sure that in ADFS the LDAP attribute “Token-Groups – Unqualified Names” is mapped to the outgoing claim type “Role”. In this case, the sAMAccountName of each security group is passed to SharePoint as plain text.

By the way, if you forgot to do this when the trusted token issuer (ADFS) was created, you can either recreate it with the “role” claim mapping included or just update the existing one. Here is the script to use:

$issuer = Get-SPTrustedIdentityTokenIssuer

$issuer.ClaimTypes.Add("http://schemas.microsoft.com/ws/2008/06/identity/claims/role")

$map=New-SPClaimTypeMapping "http://schemas.microsoft.com/ws/2008/06/identity/claims/role" -IncomingClaimTypeDisplayName "Role" -SameAsIncoming

$issuer.AddClaimTypeInformation($map)

$issuer.Update()

For the URI of each claim type, you can refer to this article:

http://technet.microsoft.com/en-us/library/ee913589.aspx
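Once the role claim reaches SharePoint, an AD security group can be granted access by adding its role claim to a SharePoint group. The sketch below shows one way to do that. The group name SP-Portal-Members, the site URL, and the SharePoint group Portal Members are hypothetical, and the script assumes that a single trusted identity token issuer exists and that the role claim type is already mapped on it.

```powershell
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

$issuer        = Get-SPTrustedIdentityTokenIssuer
$roleClaimType = "http://schemas.microsoft.com/ws/2008/06/identity/claims/role"

# "SP-Portal-Members" is a hypothetical AD security group (its sAMAccountName).
$groupClaim = New-SPClaimsPrincipal -TrustedIdentityTokenIssuer $issuer `
    -ClaimType $roleClaimType `
    -ClaimValue "SP-Portal-Members"

# Add the role claim to the site and place it in an existing SharePoint group.
New-SPUser -UserAlias $groupClaim.ToEncodedString() `
    -Web "https://portal.contoso.com" `
    -Group "Portal Members"
```

Without a custom claims provider, the claim value is not validated, so a mistyped group name is accepted silently and simply never matches any user.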

Browser support

When implementing ADFS for SharePoint, you will encounter a few issues, some of which have been mentioned above. I discovered one issue with Chrome in one of my projects, and it has a solution. You can check out this blog post for details:

http://jackstromberg.com/2014/03/adfs-v3-on-server-2012-r2-allow-chrome-to-automatically-sign-in-internally/